# DataClass

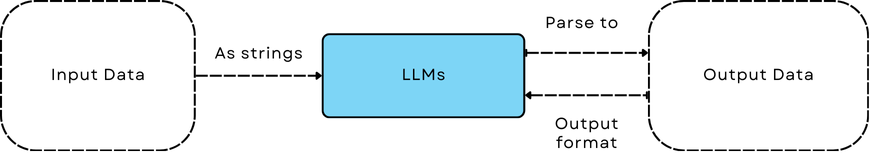

In LLM applications, data constantly needs to be passed to LLMs as strings via the prompt, and the LLMs' text predictions need to be parsed back into structured data.

DataClass is designed to ease this interaction: describing data in the prompt (input) and parsing the text prediction (output).

It is even more convenient when used together with a Parser to parse LLM output.

*Figure: DataClass eases the data interaction with LLMs.*

## Design

In Python, data is typically represented as a class with attributes.

To interact with LLMs, we need a good way to describe both the data format and data instances to LLMs, and to convert the text prediction back into a data instance.

This overlaps with data serialization and deserialization in conventional programming.

Packages like Pydantic or Marshmallow can cover serialization and deserialization, but they add complexity and are less transparent to users.

LLM prompts are known to be sensitive, so the details, controllability, and transparency of the data format are crucial here.

We eventually created a base class, DataClass, to handle data that interacts with LLMs. It builds on top of Python's native `dataclasses` module.

Here is our reasoning:

- The `dataclasses` module is lightweight, flexible, and already widely used in Python for data classes.
- Using `field(metadata, default, default_factory)` in `dataclasses` adds more ways to describe the data.
- `asdict()` from `dataclasses` is already good at converting a data class instance to a dictionary for serialization.
- Getting the schema of a data class is feasible.

Here is how users typically use the dataclasses module:

```python
from dataclasses import dataclass, field


@dataclass
class TrecData:
    question: str = field(
        metadata={"desc": "The question asked by the user"}
    )  # Required field: you must provide `question` at instantiation
    label: int = field(
        metadata={"desc": "The label of the question"}, default=0
    )  # Optional field
```
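To back the claim that a schema can be derived with the standard library alone, here is a minimal sketch that reads the `desc` metadata through `dataclasses.fields()` and serializes an instance with `asdict()`. The helper `simple_schema` is hypothetical and only approximates the idea; it is not how DataClass implements `to_schema`.

```python
from dataclasses import MISSING, asdict, dataclass, field, fields


@dataclass
class TrecData:
    question: str = field(metadata={"desc": "The question asked by the user"})
    label: int = field(metadata={"desc": "The label of the question"}, default=0)


def simple_schema(cls) -> dict:
    """Hypothetical helper: build a schema-like dict from field metadata."""
    properties = {}
    required = []
    for f in fields(cls):  # fields() preserves declaration order
        type_name = f.type.__name__ if isinstance(f.type, type) else str(f.type)
        properties[f.name] = {"type": type_name, "desc": f.metadata.get("desc", "")}
        # A field is required when it has neither a default nor a default_factory
        if f.default is MISSING and f.default_factory is MISSING:
            required.append(f.name)
    return {"type": cls.__name__, "properties": properties, "required": required}


print(simple_schema(TrecData))
print(asdict(TrecData(question="What is a dataclass?", label=1)))
```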

DataClass covers the following:

- Generate the class `schema` and `signature` (less verbose) to describe the data format to LLMs.
- Convert a data instance to a JSON or YAML string to show data examples to LLMs.
- Load a data instance from a JSON or YAML string so it can be processed in the program.

We also made the effort to provide more control:

- **Keep the ordering of your data fields.** We provide `required_field` with `default_factory` to mark a field as required even if it comes after optional fields. We also had to customize the conversion to preserve field ordering when converting to a dictionary, JSON, and YAML string.
- **Signal the output/input fields.** We allow you to use `__output_fields__` and `__input_fields__` to explicitly signal the output and input fields. (1) They can be a subset of the fields in the data class. (2) You can specify the ordering in `__output_fields__`.
- **Exclude some fields from the output.** All serialization methods support an `exclude` parameter to exclude some fields, even in nested dataclasses.
- **Allow nested dataclasses, lists, and dictionaries.** All methods support nested dataclasses, lists, and dictionaries.
- **Easy to use with output parsers.** It works well with output parsers such as `JsonOutputParser`, `YamlOutputParser`, and `DataClassParser`. Refer to the Parser documentation (`output_parsers`) for more details.

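**Describing the Data Format (Data Class)**

| Name | Description |
|---|---|
| `__input_fields__` | A list of fields that are input fields. |
| `__output_fields__` | Used more often than `__input_fields__`. A list of fields that are output fields. |
| `to_schema` | Generate a JSON schema which is more detailed than the signature. |
| `to_schema_str` | Generate a JSON schema string which is more detailed than the signature. |
| `to_yaml_signature` | Generate a YAML signature for the class from descriptions in metadata. |
| `to_json_signature` | Generate a JSON signature (JSON string) for the class from descriptions in metadata. |
| `format_class_str` | Generate a data format string; covers the schema, JSON signature, and YAML signature formats. |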

**Work with Data Instance**

| Name | Description |
|---|---|
| `from_dict` | Create a dataclass instance from a dictionary. Supports nested dataclasses, lists, and dictionaries. |
| `to_dict` | Convert a dataclass object to a dictionary. Supports nested dataclasses, lists, and dictionaries. Allows exclusion of specific fields. |
| `to_json_obj` | Convert the dataclass instance to a JSON object, maintaining the order of fields. |
| `to_json` | Convert the dataclass instance to a JSON string, maintaining the order of fields. |
| `to_yaml_obj` | Convert the dataclass instance to a YAML object, maintaining the order of fields. |
| `to_yaml` | Convert the dataclass instance to a YAML string, maintaining the order of fields. |
| `from_json` | Create a dataclass instance from a JSON string. |
| `from_yaml` | Create a dataclass instance from a YAML string. |
| `format_example_str` | Generate a data example string; covers the JSON and YAML example formats. |

We provide `DataClassFormatType` to specify the format type for the data format methods.

**Note:** To use DataClass, you must decorate your class with the `dataclass` decorator from the `dataclasses` module.
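The reason is that `dataclasses` only collects a class's annotated fields when the decorator runs on that class itself. The sketch below uses a hypothetical stand-in parent, `Base`, in place of DataClass to show what a subclass loses without the decorator.

```python
from dataclasses import dataclass, fields


@dataclass
class Base:  # hypothetical stand-in for a dataclass-based parent such as DataClass
    pass


class NotDecorated(Base):  # missing @dataclass: 'x' stays a plain class attribute
    x: int = 1


@dataclass
class Decorated(Base):  # decorated: 'x' becomes a dataclass field
    x: int = 1


print([f.name for f in fields(NotDecorated)])  # []
print([f.name for f in fields(Decorated)])  # ['x']
```

Because `NotDecorated` only inherits the parent's (empty) field list and `__init__`, calling `NotDecorated(x=5)` raises a `TypeError`.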

## DataClass in Action

Say you have a few `TrecData` instances, structured as follows, that you want to use with LLMs:

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    question: str = field(
        metadata={"desc": "The question asked by the user"}
    )
    metadata: dict = field(
        metadata={"desc": "The metadata of the question"}, default_factory=dict
    )


@dataclass
class TrecData:
    question: Question = field(
        metadata={"desc": "The question asked by the user"}
    )  # Required field: you must provide `question` at instantiation
    label: int = field(
        metadata={"desc": "The label of the question"}, default=0
    )  # Optional field
```

### Describe the data format to LLMs

We will create a `TrecData2` class that subclasses `DataClass`.

Suppose you decide to add a `metadata` field to the `TrecData` class to store the question's metadata.

For your own reasons, you want `metadata` to be a required field, and you want to keep the ordering of your fields when they are converted to strings.

DataClass helps you achieve this by passing `required_field()` as the field's `default_factory`.

Normally, this is not possible with the native `dataclasses` module, which raises a `TypeError` if a required field (one without a default) follows an optional field.
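One way such a helper can work, sketched here with the standard library only, is a `default_factory` that raises when called. Because the field technically has a default factory, `dataclasses` accepts it after an optional field, yet omitting it at instantiation still fails. The name `my_required_field` is hypothetical; this is not AdalFlow's `required_field` source.

```python
from dataclasses import dataclass, field, fields
from typing import Any, Callable


def my_required_field() -> Callable[[], Any]:
    """Hypothetical stand-in: a default_factory that refuses to produce a value."""

    def _raise() -> Any:
        raise TypeError("This field is required and must be provided.")

    return _raise


@dataclass
class Record:
    question: str = field(metadata={"desc": "The question asked by the user"})
    label: int = field(metadata={"desc": "The label of the question"}, default=0)
    # Required, yet allowed after the optional 'label' because it has a factory
    metadata: dict = field(
        metadata={"desc": "The metadata of the question"},
        default_factory=my_required_field(),
    )


print([f.name for f in fields(Record)])  # ['question', 'label', 'metadata']
print(Record("What is AI?", 1, {"source": "demo"}))
try:
    Record("What is AI?")  # metadata omitted, so the factory raises
except TypeError as err:
    print(err)
```

The trade-off is that the missing field is only detected when `__init__` runs, not when the class is defined.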

**Note:** The order of the fields matters: in a typical Chain of Thought, we want the reasoning/thought field to appear in the output ahead of the answer.

```python
from dataclasses import dataclass, field

from adalflow.core import DataClass, required_field


# `Question` is the dataclass defined in the previous example
@dataclass
class TrecData2(DataClass):
    question: Question = field(
        metadata={"desc": "The question asked by the user"}
    )  # Required field: you must provide `question` at instantiation
    label: int = field(
        metadata={"desc": "The label of the question"}, default=0
    )  # Optional field
    metadata: dict = field(
        metadata={"desc": "The metadata of the question"}, default_factory=required_field()
    )  # Required field, even though it comes after the optional `label`
```

#### Schema

Now, let us see the schema of the TrecData2 class:

```python
print(TrecData2.to_schema())
```

The output will be:

```
{
    "type": "TrecData2",
    "properties": {
        "question": {
            "type": "{'type': 'Question', 'properties': {'question': {'type': 'str', 'desc': 'The question asked by the user'}, 'metadata': {'type': 'dict', 'desc': 'The metadata of the question'}}, 'required': ['question']}",
            "desc": "The question asked by the user",
        },
        "label": {"type": "int", "desc": "The label of the question"},
        "metadata": {"type": "dict", "desc": "The metadata of the question"},
    },
    "required": ["question", "metadata"],
}
```

As you can see, it handles the nested dataclass Question and the required field metadata correctly.

**Note:** An `Optional` type hint does not affect a field's required status. We recommend not using it in dataclasses, especially when nesting many levels of dataclasses, as it might end up confusing the LLMs.
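A quick standard-library check confirms this: `Optional[str]` only changes the type hint, so a field without a default is still required. The `Answer` class below is a hypothetical example.

```python
from dataclasses import MISSING, dataclass, field, fields
from typing import Optional


@dataclass
class Answer:
    # Optional[...] is only a type hint; with no default, the field stays required
    text: Optional[str] = field(metadata={"desc": "The answer text"})


text_field = fields(Answer)[0]
print(text_field.default is MISSING)  # True: no default was provided
try:
    Answer()  # omitting 'text' fails despite the Optional hint
except TypeError as err:
    print(err)
print(Answer(text=None))  # None has to be passed explicitly
```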

#### Signature

A schema can be rather verbose; sometimes it works better to be more concise and to mimic the output data structure you want.

Say you want the LLM to generate a YAML or JSON string that you can later convert back to a dictionary, or even to your data instance.

We can do so using the signature:

```python
print(TrecData2.to_json_signature())
```

The JSON signature output will be:

```json
{
    "question": "The question asked by the user ({'type': 'Question', 'properties': {'question': {'type': 'str', 'desc': 'The question asked by the user'}, 'metadata': {'type': 'dict', 'desc': 'The metadata of the question'}}, 'required': ['question']}) (required)",
    "label": "The label of the question (int) (optional)",
    "metadata": "The metadata of the question (dict) (required)"
}
```

The YAML signature will be:

```
question: The question asked by the user ({'type': 'Question', 'properties': {'question': {'type': 'str', 'desc': 'The question asked by the user'}, 'metadata': {'type': 'dict', 'desc': 'The metadata of the question'}}, 'required': ['question']}) (required)
label: The label of the question (int) (optional)
metadata: The metadata of the question (dict) (required)
```
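The signature lines above follow a simple per-field pattern, `<desc> (<type>) (required|optional)`. As a rough stdlib-only sketch of that pattern for flat dataclasses (nested dataclasses are not expanded here), the hypothetical helpers `signature_dict`, `json_signature`, and `yaml_signature` below reproduce it; they are not DataClass's `to_json_signature` or `to_yaml_signature`.

```python
import json
from dataclasses import MISSING, dataclass, field, fields


@dataclass
class FlatTrec:
    question: str = field(metadata={"desc": "The question asked by the user"})
    label: int = field(metadata={"desc": "The label of the question"}, default=0)


def signature_dict(cls) -> dict:
    """Hypothetical helper: map each field to '<desc> (<type>) (required|optional)'."""
    sig = {}
    for f in fields(cls):
        required = f.default is MISSING and f.default_factory is MISSING
        type_name = f.type.__name__ if isinstance(f.type, type) else str(f.type)
        status = "required" if required else "optional"
        sig[f.name] = f"{f.metadata.get('desc', '')} ({type_name}) ({status})"
    return sig


def json_signature(cls) -> str:
    return json.dumps(signature_dict(cls), indent=4)


def yaml_signature(cls) -> str:
    # Plain "key: value" lines for display; not guaranteed to be strictly valid YAML
    return "\n".join(f"{k}: {v}" for k, v in signature_dict(cls).items())


print(json_signature(FlatTrec))
print(yaml_signature(FlatTrec))
```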

**Note:** If you use the schema (a JSON string) to instruct LLMs to output YAML data, the LLMs might get confused and output JSON instead.

#### Exclude

If you decide not to show some fields in the output, you can use the `exclude` parameter of these methods.

Let's exclude both `metadata` from class `TrecData2` and `metadata` from class `Question`:

```python
json_signature_exclude = TrecData2.to_json_signature(exclude={"TrecData2": ["metadata"], "Question": ["metadata"]})
print(json_signature_exclude)
```

The output will be:

```json
{
    "question": "The question asked by the user ({'type': 'Question', 'properties': {'question': {'type': 'str', 'desc': 'The question asked by the user'}}, 'required': ['question']}) (required)",
    "label": "The label of the question (int) (optional)"
}
```

If you only want to exclude `metadata` from `TrecData2` (the outer class), you can simply pass a list of strings:

```python
json_signature_exclude = TrecData2.to_json_signature(exclude=["metadata"])
print(json_signature_exclude)
```

The output will be:

```json
{
    "question": "The question asked by the user ({'type': 'Question', 'properties': {'question': {'type': 'str', 'desc': 'The question asked by the user'}, 'metadata': {'type': 'dict', 'desc': 'The metadata of the question'}}, 'required': ['question']}) (required)",
    "label": "The label of the question (int) (optional)"
}
```

The exclude parameter works the same across all methods.
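To make the two accepted shapes of `exclude` concrete, here is a minimal recursive sketch on plain dataclasses: a list applies to the outer class only, while a dict maps class names to the fields to drop at any nesting level. The helper `to_dict_excluding` is hypothetical, does not descend into lists or dicts of dataclasses, and may differ in detail from DataClass's own handling.

```python
from dataclasses import dataclass, field, fields, is_dataclass
from typing import Dict, List, Union

ExcludeSpec = Union[List[str], Dict[str, List[str]], None]


@dataclass
class Question:
    question: str
    metadata: dict = field(default_factory=dict)


@dataclass
class TrecData2:
    question: Question
    label: int = 0
    metadata: dict = field(default_factory=dict)


def to_dict_excluding(obj, exclude: ExcludeSpec = None, _outer: bool = True) -> dict:
    """Hypothetical helper: dataclass -> dict, dropping excluded fields recursively."""
    if isinstance(exclude, dict):
        skip = set(exclude.get(type(obj).__name__, []))  # per-class exclusion
    elif isinstance(exclude, list) and _outer:
        skip = set(exclude)  # a plain list only applies to the outer class
    else:
        skip = set()
    result = {}
    for f in fields(obj):
        if f.name in skip:
            continue
        value = getattr(obj, f.name)
        if is_dataclass(value):
            value = to_dict_excluding(value, exclude, _outer=False)
        result[f.name] = value
    return result


example = TrecData2(Question("What is the capital of France?"), 1, {"key": "value"})
print(to_dict_excluding(example, {"TrecData2": ["metadata"], "Question": ["metadata"]}))
print(to_dict_excluding(example, ["metadata"]))
```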

#### DataClassFormatType

For the class format, use `DataClassFormatType` with the `format_class_str` method to choose the format type:

```python
from adalflow.core import DataClassFormatType

json_signature = TrecData2.format_class_str(DataClassFormatType.SIGNATURE_JSON)
print(json_signature)

yaml_signature = TrecData2.format_class_str(DataClassFormatType.SIGNATURE_YAML)
print(yaml_signature)

schema = TrecData2.format_class_str(DataClassFormatType.SCHEMA)
print(schema)
```

### Show data examples & parse string to data instance

Our data-instance functionality helps you show data examples to LLMs.

This is mainly done via the `to_dict` method, whose output you can further convert to a JSON or YAML string.

To convert a raw JSON or YAML string back into a data instance, we leverage the class method `from_dict`.

It is therefore important that DataClass guarantees the reconstructed data instance equals the original one.

Here is how you can do it with a DataClass subclass:

```python
example = TrecData2(Question("What is the capital of France?"), 1, {"key": "value"})
print(example)

dict_example = example.to_dict()
print(dict_example)

reconstructed = TrecData2.from_dict(dict_example)
print(reconstructed)

print(reconstructed == example)
```

The output will be:

```
TrecData2(question=Question(question='What is the capital of France?', metadata={}), label=1, metadata={'key': 'value'})
{'question': {'question': 'What is the capital of France?', 'metadata': {}}, 'label': 1, 'metadata': {'key': 'value'}}
TrecData2(question=Question(question='What is the capital of France?', metadata={}), label=1, metadata={'key': 'value'})
True
```
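Round-tripping nested data requires `from_dict` to rebuild inner dataclasses from plain dicts. A minimal sketch of that idea, driven by type hints, is below; `from_dict_sketch` is a hypothetical helper that handles directly nested dataclasses only (not lists or dicts of dataclasses), unlike DataClass's `from_dict`.

```python
from dataclasses import asdict, dataclass, field, fields, is_dataclass
from typing import Any, Dict, get_type_hints


@dataclass
class Question:
    question: str
    metadata: dict = field(default_factory=dict)


@dataclass
class TrecData2:
    question: Question
    label: int = 0
    metadata: dict = field(default_factory=dict)


def from_dict_sketch(cls, data: Dict[str, Any]):
    """Hypothetical helper: rebuild a (possibly nested) dataclass from a dict."""
    hints = get_type_hints(cls)  # resolve annotations to real types
    kwargs = {}
    for f in fields(cls):
        if f.name not in data:
            continue  # let the field's default apply
        value = data[f.name]
        field_type = hints[f.name]
        if is_dataclass(field_type) and isinstance(value, dict):
            value = from_dict_sketch(field_type, value)  # recurse into nested class
        kwargs[f.name] = value
    return cls(**kwargs)


example = TrecData2(Question("What is the capital of France?"), 1, {"key": "value"})
reconstructed = from_dict_sketch(TrecData2, asdict(example))
print(reconstructed == example)  # True
```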

On top of `from_dict` and `to_dict`, you can also work directly with:

- `from_yaml` (reconstructs an instance from a YAML string) and `to_yaml` (produces a YAML string)
- `from_json` (reconstructs an instance from a JSON string) and `to_json` (produces a JSON string)

Here is how it works with a DataClass subclass:

```python
json_str = example.to_json()
print(json_str)

yaml_str = example.to_yaml()  # instance method: no need to pass `example` again
print(yaml_str)

reconstructed_from_json = TrecData2.from_json(json_str)
print(reconstructed_from_json)
print(reconstructed_from_json == example)

reconstructed_from_yaml = TrecData2.from_yaml(yaml_str)
print(reconstructed_from_yaml)
print(reconstructed_from_yaml == example)
```

The output will be:

```
{
    "question": {
        "question": "What is the capital of France?",
        "metadata": {}
    },
    "label": 1,
    "metadata": {
        "key": "value"
    }
}
question:
  question: What is the capital of France?
  metadata: {}
label: 1
metadata:
  key: value
TrecData2(question=Question(question='What is the capital of France?', metadata={}), label=1, metadata={'key': 'value'})
True
TrecData2(question=Question(question='What is the capital of France?', metadata={}), label=1, metadata={'key': 'value'})
True
```
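For JSON, field order survives a round trip on its own: `asdict()` follows declaration order, `json.dumps` keeps dict insertion order unless `sort_keys=True`, and `json.loads` returns keys in document order. The stdlib-only sketch below uses hypothetical helpers `to_json_sketch` and `from_json_sketch` on a flat class (not DataClass's `to_json`/`from_json`); YAML is left out because it needs a third-party library such as PyYAML.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Answer:
    thought: str  # reasoning first, as in Chain of Thought
    answer: str


def to_json_sketch(obj) -> str:
    # sort_keys defaults to False, so keys stay in field declaration order
    return json.dumps(asdict(obj), indent=2)


def from_json_sketch(cls, json_str: str):
    return cls(**json.loads(json_str))


original = Answer(thought="Paris is France's capital.", answer="Paris")
json_str = to_json_sketch(original)
print(json_str)
print(from_json_sketch(Answer, json_str) == original)  # True
```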

Similarly, (1) `to_dict`, `to_json`, and `to_yaml` all accept the `exclude` parameter to exclude some fields from the output, and (2) you can use `DataClassFormatType` with the `format_example_str` method to choose the format type for data examples.

```python
from adalflow.core import DataClassFormatType

example_str = example.format_example_str(DataClassFormatType.EXAMPLE_JSON)
print(example_str)

example_str = example.format_example_str(DataClassFormatType.EXAMPLE_YAML)
print(example_str)
```

### Load data from dataset as example

We often need to load or create instances from a dataset, typically a PyTorch or Hugging Face dataset, where each data point is a dictionary.

The way you want to describe your data format to LLMs might not match the existing dataset's keys. You can do a bit of customization to map the dataset's keys to the field names in your data class, for example by overriding `from_dict`:

```python
from dataclasses import dataclass, field
from typing import Dict

from adalflow.core import DataClass


@dataclass
class OutputFormat(DataClass):
    thought: str = field(
        metadata={
            "desc": "Your reasoning to classify the question to class_name",
        }
    )
    class_name: str = field(metadata={"desc": "class_name"})
    class_index: int = field(metadata={"desc": "class_index in range[0, 5]"})

    @classmethod
    def from_dict(cls, data: Dict[str, object]):
        _COARSE_LABELS_DESC = [
            "Abbreviation",
            "Entity",
            "Description and abstract concept",
            "Human being",
            "Location",
            "Numeric value",
        ]
        # Map the dataset's `coarse_label` key to our own field names;
        # the dataset provides no reasoning, so `thought` is left as None
        data = {
            "thought": None,
            "class_index": data["coarse_label"],
            "class_name": _COARSE_LABELS_DESC[data["coarse_label"]],
        }
        return super().from_dict(data)
```
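If you want to try the key-mapping idea without AdalFlow installed, the sketch below applies the same pattern to a plain dataclass. The class `OutputFormatSketch`, its classmethod `from_dataset_row`, and the sample row are hypothetical; the row only mimics a TREC-style record with a `coarse_label` key.

```python
from dataclasses import dataclass, field
from typing import Dict

_COARSE_LABELS_DESC = [
    "Abbreviation",
    "Entity",
    "Description and abstract concept",
    "Human being",
    "Location",
    "Numeric value",
]


@dataclass
class OutputFormatSketch:
    thought: str = field(metadata={"desc": "Your reasoning to classify the question"})
    class_name: str = field(metadata={"desc": "class_name"})
    class_index: int = field(metadata={"desc": "class_index in range[0, 5]"})

    @classmethod
    def from_dataset_row(cls, row: Dict[str, object]) -> "OutputFormatSketch":
        # Map the dataset's 'coarse_label' key onto our own field names;
        # the dataset has no reasoning, so thought is None (as in the example above)
        index = int(row["coarse_label"])
        return cls(thought=None, class_name=_COARSE_LABELS_DESC[index], class_index=index)


row = {"text": "What does NASA stand for?", "coarse_label": 0}  # hypothetical sample
print(OutputFormatSketch.from_dataset_row(row))
```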

**Note:** If you are looking for the data types we use to support each component, or for any other class such as `Optimizer`, check out the `core.types` file.

## About `__output_fields__`

Though you can use `exclude` in the `JsonOutputParser` to exclude some fields from the output, it is less readable and less convenient than directly using `__output_fields__` in the data class to signal the output fields and working with `DataClassParser`.
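As a rough illustration of why `__output_fields__` is convenient, the stdlib-only sketch below treats it as a plain class attribute (dunder names are not name-mangled and, lacking an annotation, it does not become a dataclass field) and uses it to pick and order the fields described to the LLM. The class `QAOutput` and the helper `output_signature` are hypothetical, not `DataClassParser`'s implementation.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class QAOutput:
    question: str = field(metadata={"desc": "The question to answer"})
    thought: str = field(metadata={"desc": "Your step-by-step reasoning"})
    answer: str = field(metadata={"desc": "The final answer"})

    # Not annotated, so dataclasses treats it as a class attribute, not a field
    __output_fields__ = ["thought", "answer"]


def output_signature(cls) -> str:
    """Hypothetical helper: describe only the output fields, in the declared order."""
    order: List[str] = getattr(cls, "__output_fields__", [f.name for f in fields(cls)])
    descs = {f.name: f.metadata.get("desc", "") for f in fields(cls)}
    return "\n".join(f"{name}: {descs[name]}" for name in order)


print(output_signature(QAOutput))
```

Declaring the subset once on the class keeps the ordering next to the field definitions, instead of repeating an `exclude` list at every call site.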

## References

- Dataclasses: https://docs.python.org/3/library/dataclasses.html