Prompt

AdalFlow leverages Jinja2 [1] to programmatically format the prompt for the language model.

Because a template aggregates the different parts of the prompt in one place, it makes an LLM application much easier to understand.

We created a Prompt class that lets developers render a prompt from a string template and prompt_kwargs conveniently and securely.
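To illustrate the template plus prompt_kwargs idea, here is a minimal, stdlib-only sketch. MiniPrompt is a hypothetical class, not AdalFlow's Prompt: it supports only bare {{ name }} placeholders (no Jinja2 logic), merges call-time keyword arguments over the defaults given at construction, and raises an error on a missing variable instead of silently rendering an empty string.

```python
import re
from typing import Any, Dict, Optional

# Matches simple {{ name }} placeholders only (no filters, loops, or expressions).
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


class MiniPrompt:
    """Hypothetical stand-in for a prompt component (not AdalFlow's API)."""

    def __init__(self, template: str, prompt_kwargs: Optional[Dict[str, Any]] = None):
        self.template = template
        # Copy so later changes to the caller's dict do not leak in.
        self.prompt_kwargs = dict(prompt_kwargs or {})

    def __call__(self, **kwargs: Any) -> str:
        # Call-time kwargs override the defaults given at construction.
        values = {**self.prompt_kwargs, **kwargs}

        def substitute(match: "re.Match[str]") -> str:
            name = match.group(1)
            if name not in values:
                # Fail loudly rather than rendering an empty string.
                raise KeyError(f"missing prompt variable: {name}")
            return str(values[name])

        return _PLACEHOLDER.sub(substitute, self.template)


prompt = MiniPrompt(
    "<SYS>{{ task_desc_str }}</SYS> <USER>{{ input_str }}</USER>",
    prompt_kwargs={"task_desc_str": "You are a helpful assistant"},
)
print(prompt(input_str="What is the capital of France?"))
```

Keeping defaults on the object and passing only the changing input at call time is the same split the Jinja2 example later on this page uses.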

Introduction

A prompt is the input text given to a language model (LM) to generate responses. We believe prompting is the new programming language, and developers need maximum control over the prompt.

Data flow in LLM applications

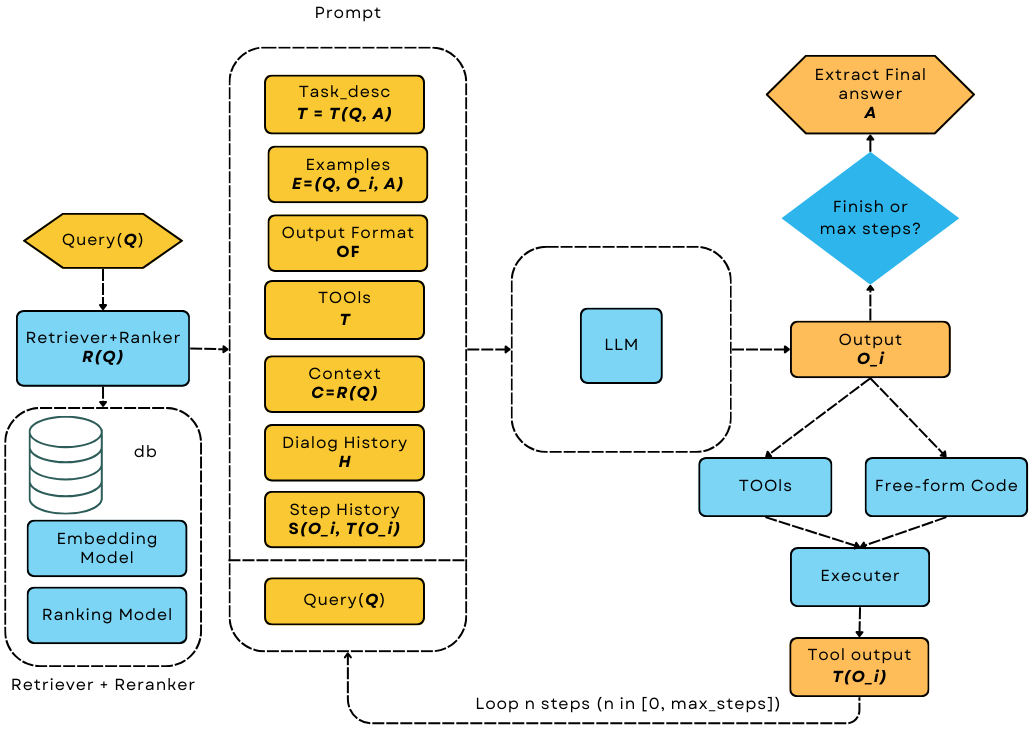

Various LLM application patterns, from RAG to agents, can be implemented by formatting a subpart of the prompt.
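For RAG, for example, the subpart is a context section filled with retrieved passages. The sketch below assumes retrieval has already happened and only formats its output; the template text and function names are hypothetical, and it uses Python's str.format rather than AdalFlow's Prompt. An agent would fill other slots (tool descriptions, step history) in the same way.

```python
from typing import List

# Hypothetical template: only the {context} slot differs from a plain QA prompt.
RAG_TEMPLATE = (
    "Answer the question using only the context below.\n"
    "<CONTEXT>\n{context}\n</CONTEXT>\n"
    "<QUESTION>{question}</QUESTION>"
)


def format_context(passages: List[str]) -> str:
    """Number the retrieved passages so the model can refer to them."""
    return "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))


def build_rag_prompt(question: str, passages: List[str]) -> str:
    # Retrieval happens elsewhere; here we only format its output into the prompt.
    return RAG_TEMPLATE.format(context=format_context(passages), question=question)


print(build_rag_prompt(
    "What is the capital of France?",
    ["Paris is the capital of France.", "France is in Western Europe."],
))
```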

Researchers often use special tokens [2] to separate different sections of the prompt, such as the system message, user message, and assistant message. For a Llama 3 model, the final text sent to the model for tokenization will be:

```python
final_prompt = r"""<|begin_of_text|><|start_header_id|>system<|end_header_id|>

{{simple_prompt}} <|eot_id|>"""
```

The LLM will then return the following text:

```python
prediction = r"""<|start_header_id|>assistant<|end_header_id|> You can ask me anything you want. <|eot_id|><|end_of_text|>"""
```
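The two strings above can be produced and consumed programmatically. The sketch below assumes the standard Llama 3 chat layout (a header per role, a blank line, the content, then <|eot_id|>, ending with an open assistant header so the model continues from there) and strips the special tokens from a raw prediction. format_llama3 and parse_llama3_reply are hypothetical helpers; in practice a tokenizer's chat template usually does this for you.

```python
from typing import Dict, List


def format_llama3(messages: List[Dict[str, str]]) -> str:
    """Render role-tagged messages with Llama 3 special tokens."""
    parts = ["<|begin_of_text|>"]
    for m in messages:
        parts.append(
            f"<|start_header_id|>{m['role']}<|end_header_id|>\n\n{m['content']}<|eot_id|>"
        )
    # Open an assistant turn so the model generates the reply next.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


def parse_llama3_reply(prediction: str) -> str:
    """Strip the assistant header and end-of-turn tokens from raw model output."""
    text = prediction
    header = "<|start_header_id|>assistant<|end_header_id|>"
    if text.startswith(header):
        text = text[len(header):]
    for token in ("<|eot_id|>", "<|end_of_text|>"):
        text = text.replace(token, "")
    return text.strip()


prompt_text = format_llama3([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What can I ask you?"},
])
reply = parse_llama3_reply(
    "<|start_header_id|>assistant<|end_header_id|> You can ask me anything you want. <|eot_id|><|end_of_text|>"
)
print(reply)  # You can ask me anything you want.
```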

However, many proprietary APIs do not disclose their special tokens and instead require users to send the prompt as messages with different roles.
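One way to bridge the two worlds is to keep a single tagged prompt string and split it into role/content messages before calling such an API. The sketch below assumes hypothetical section tags (<START_OF_SYSTEM_MESSAGE> and <START_OF_USER> with matching end tags) and the common list-of-dicts message shape; to_messages is illustrative only, and any text outside the tags is dropped.

```python
import re
from typing import Dict, List

# Hypothetical section tags; the backreference \1 pairs each start tag with its end tag.
_SECTION = re.compile(r"<START_OF_(SYSTEM_MESSAGE|USER)>(.*?)<END_OF_\1>", re.DOTALL)
_ROLE = {"SYSTEM_MESSAGE": "system", "USER": "user"}


def to_messages(rendered_prompt: str) -> List[Dict[str, str]]:
    """Split a tagged prompt string into a list of role/content messages."""
    return [
        {"role": _ROLE[tag], "content": body.strip()}
        for tag, body in _SECTION.findall(rendered_prompt)
    ]


print(to_messages(
    "<START_OF_SYSTEM_MESSAGE>You are a helpful assistant<END_OF_SYSTEM_MESSAGE>\n"
    "<START_OF_USER>What is the capital of France? <END_OF_USER>"
))
```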

Use Prompt Class

Besides {{ }} placeholders for keyword arguments, Jinja2 also allows users to write code with Python-like syntax.

This includes conditionals, loops, filters, and even comments, which are lacking in Python’s native string formatting.

By default, the Prompt class uses DEFAULT_ADALFLOW_SYSTEM_PROMPT as its string template if no template is provided.

However, it is easy to create your own template with Jinja2 syntax.

Here is one example of using Jinja2 to format the prompt with comments {# #} and code blocks {% %}:

```python
import adalflow as adal

template = r"""<START_OF_SYSTEM_MESSAGE>{{ task_desc_str }}<END_OF_SYSTEM_MESSAGE>
{# tools #}
{% if tools %}
<TOOLS>
{% for tool in tools %}
{{loop.index}}. {{ tool }}
{% endfor %}
</TOOLS>{% endif %}
<START_OF_USER>{{ input_str }} <END_OF_USER>"""

task_desc_str = "You are a helpful assistant"
tools = ["google", "wikipedia", "wikidata"]

# prompt_kwargs given here are stored on the Prompt; input_str is supplied at call time.
prompt = adal.Prompt(
    template=template,
    prompt_kwargs={
        "task_desc_str": task_desc_str,
        "tools": tools,
    },
)

print(prompt(input_str="What is the capital of France?"))
```

The printout would look like this:

```
<START_OF_SYSTEM_MESSAGE>You are a helpful assistant<END_OF_SYSTEM_MESSAGE>
<TOOLS>
1. google
2. wikipedia
3. wikidata
</TOOLS>
<START_OF_USER>What is the capital of France? <END_OF_USER>
```
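For comparison, here is roughly what that template does, written in plain Python with no template engine. render_without_jinja is a hypothetical name, and the sketch simplifies whitespace rather than reproducing Jinja2's exact output. Note how the branching and numbering move out of the prompt and into code; Jinja2's {% if %}, {% for %}, and loop.index let you keep that logic inside the template instead.

```python
from typing import List


def render_without_jinja(task_desc_str: str, tools: List[str], input_str: str) -> str:
    """Plain-Python equivalent of the Jinja2 template above (whitespace simplified)."""
    parts = [f"<START_OF_SYSTEM_MESSAGE>{task_desc_str}<END_OF_SYSTEM_MESSAGE>"]
    # {% if tools %}: only emit the TOOLS section when the list is non-empty.
    if tools:
        # {% for tool in tools %} with {{ loop.index }}, which is 1-based.
        numbered = [f"{i}. {tool}" for i, tool in enumerate(tools, start=1)]
        parts.append("<TOOLS>\n" + "\n".join(numbered) + "\n</TOOLS>")
    parts.append(f"<START_OF_USER>{input_str} <END_OF_USER>")
    return "\n".join(parts)


print(render_without_jinja(
    "You are a helpful assistant",
    ["google", "wikipedia", "wikidata"],
    "What is the capital of France?",
))
```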

As with all components, you can use to_dict and from_dict to serialize and deserialize the component.
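AdalFlow handles this serialization for you; the sketch below only illustrates the round-trip idea with a hypothetical PromptConfig dataclass and the standard json module. It makes no claim about the structure AdalFlow's own to_dict actually produces.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Dict


@dataclass
class PromptConfig:
    """Hypothetical serializable prompt state (not AdalFlow's Prompt class)."""

    template: str
    prompt_kwargs: Dict[str, Any] = field(default_factory=dict)

    def to_dict(self) -> Dict[str, Any]:
        # Only plain data is stored, so the result is JSON-serializable.
        return asdict(self)

    @classmethod
    def from_dict(cls, data: Dict[str, Any]) -> "PromptConfig":
        return cls(**data)


original = PromptConfig("{{ task_desc_str }}", {"task_desc_str": "You are a helpful assistant"})
saved = json.dumps(original.to_dict())
restored = PromptConfig.from_dict(json.loads(saved))
print(restored == original)  # True: dataclass equality compares fields
```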

Note

In practice, you rarely need to use the raw Prompt class directly, as it is orchestrated by the Generator.
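To show what "orchestrated" means here, the sketch below wires three steps together: render the prompt, call a model, parse the output. MiniGenerator and fake_model are hypothetical; the constructor arguments are not AdalFlow's Generator API, and the model is a stub that always returns the same JSON string.

```python
import json
from typing import Any, Callable


class MiniGenerator:
    """Hypothetical pipeline: render prompt -> call model -> parse output."""

    def __init__(
        self,
        render: Callable[..., str],
        model: Callable[[str], str],
        parse: Callable[[str], Any],
    ):
        self.render = render
        self.model = model
        self.parse = parse

    def __call__(self, **prompt_kwargs: Any) -> Any:
        prompt_text = self.render(**prompt_kwargs)  # e.g. a Prompt component
        raw = self.model(prompt_text)               # e.g. an API client call
        return self.parse(raw)                      # e.g. an output parser


def fake_model(prompt_text: str) -> str:
    # Stand-in for a real LLM call; always answers with the same JSON string.
    return '{"answer": "Paris"}'


gen = MiniGenerator(
    render=lambda input_str: f"<START_OF_USER>{input_str} <END_OF_USER>",
    model=fake_model,
    parse=json.loads,
)
print(gen(input_str="What is the capital of France?"))  # {'answer': 'Paris'}
```

Because each step is a plain callable, any of them can be swapped (a different template, model client, or parser) without touching the others.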

- Here is the prompt template for the ReAct agent. It consists of (1) the system task description, (2) tools, (3) context variables, (4) output format, (5) user input, and (6) past history. By reading the prompt structure, developers can easily understand the agent’s behavior and functionality.
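The six-part structure can be sketched as an ordered list of optional sections, each emitted only when it has content (the role an {% if %} block plays in a Jinja2 template). The section names and tag format below are hypothetical and simply follow the order listed above; AdalFlow's actual ReAct template may name and format them differently.

```python
from typing import Dict, List, Optional

# Order follows the six parts listed above; names and tags are hypothetical.
SECTION_ORDER = [
    "task_desc", "tools", "context_variables", "output_format", "input", "history",
]


def assemble_prompt(sections: Dict[str, Optional[str]]) -> str:
    """Join non-empty sections in a fixed order, each wrapped in its own tag."""
    blocks: List[str] = []
    for name in SECTION_ORDER:
        text = sections.get(name)
        if text:  # skip missing or empty sections
            tag = name.upper()
            blocks.append(f"<{tag}>\n{text}\n</{tag}>")
    return "\n".join(blocks)


print(assemble_prompt({
    "task_desc": "You are a helpful agent.",
    "tools": "1. search(query)",
    "input": "What is the capital of France?",
    "history": "",  # no previous steps yet, so this section is omitted
}))
```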

You do not need to handle every part of a prompt yourself: (1) parsers such as JsonParser and DataClassParser help you handle output formatting, and (2) FuncTool helps you describe a function tool in the prompt.
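To give a feel for what those two helpers take off your plate, here is a stdlib-only sketch of both ideas. parse_json_output and describe_tool are hypothetical functions, not the JsonParser or FuncTool APIs: the first pulls the first JSON object out of a reply that may contain surrounding prose, and the second builds a one-line tool description from a function's signature and docstring.

```python
import inspect
import json
from typing import Any, Callable


def parse_json_output(text: str) -> Any:
    """Parse the first JSON object in an LLM reply, ignoring text around it."""
    start = text.find("{")
    if start == -1:
        raise ValueError("no JSON object found in output")
    # raw_decode parses one JSON value from the start of the string and
    # returns (value, end_index), so trailing prose is ignored.
    obj, _ = json.JSONDecoder().raw_decode(text[start:])
    return obj


def describe_tool(func: Callable[..., Any]) -> str:
    """Build a one-line tool description from a function's signature and docstring."""
    doc = inspect.getdoc(func) or "No description."
    return f"{func.__name__}{inspect.signature(func)}: {doc.splitlines()[0]}"


def search(query: str, top_k: int = 3) -> list:
    """Search the web and return the top_k results."""
    return []


print(parse_json_output('Sure! {"answer": "Paris"} Hope this helps.'))
print(describe_tool(search))
```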

References